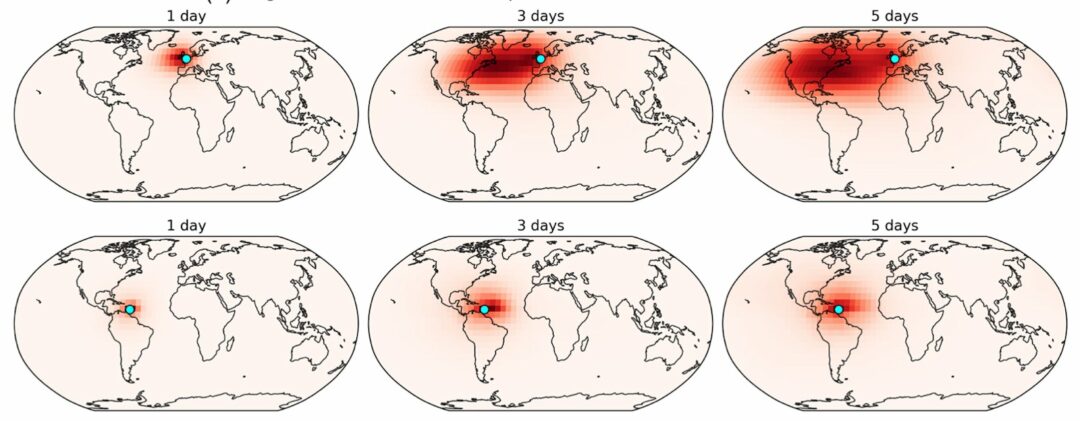

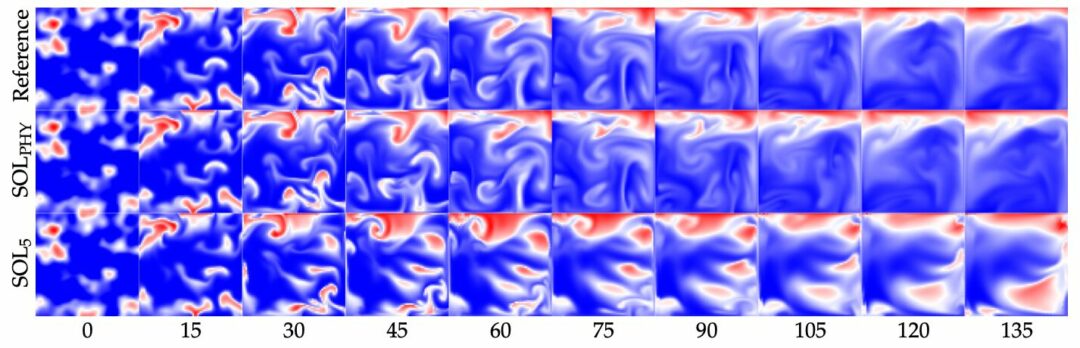

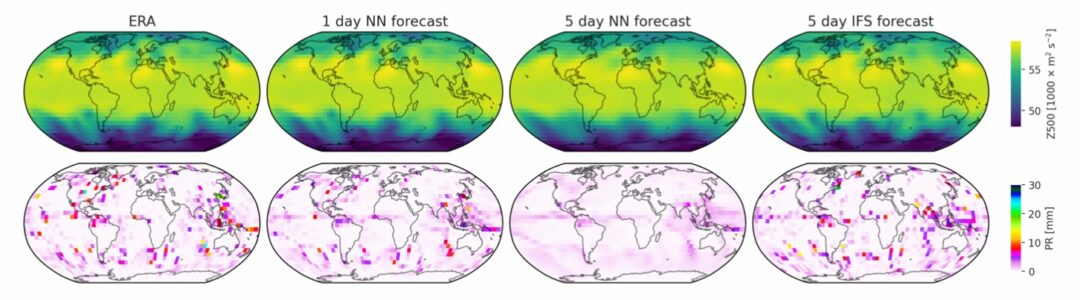

Our own entry in the WeatherBench benchmark is now published in the Journal of Advances in Modeling Earth Systems. It outperforms previous submissions, with an RMSE of 268 and 499 m² s⁻² for 3-day and 5-day Z500 (500 hPa geopotential) forecasts, respectively. It is also at least on par with a full traditional model run at a similar resolution. That said, it still clearly falls behind the operational forecasting reference. Hopefully it will inspire more people to join the WeatherBench challenge and further improve the forecasts!

The full article can be read here, and the current leaderboard for WeatherBench can be found on the corresponding GitHub page.

Paper Abstract: Numerical weather prediction has traditionally been based on the models that discretize the dynamical and physical equations of the atmosphere. Recently, however, the rise of deep learning has created increased interest in purely data‐driven medium‐range weather forecasting with first studies exploring the feasibility of such an approach. To accelerate progress in this area, the WeatherBench benchmark challenge was defined. Here, we train a deep residual convolutional neural network (Resnet) to predict geopotential, temperature and precipitation at 5.625° resolution up to 5 days ahead. To avoid overfitting and improve forecast skill, we pretrain the model using historical climate model output before fine‐tuning on reanalysis data. The resulting forecasts outperform previous submissions to WeatherBench and are comparable in skill to a physical baseline at similar resolution. We also analyze how the neural network makes its predictions and find that the model has learned physically reasonable correlations.
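For readers wondering how an RMSE score like the Z500 numbers quoted above is computed: WeatherBench weights each grid row by the cosine of its latitude, so that the many small grid cells near the poles don't dominate the score. Below is a minimal sketch of that metric for a single forecast field. The function name `lat_weighted_rmse` and the nested-list layout are our own illustrative choices, not the benchmark's actual evaluation code, and the benchmark additionally averages the score over all forecast times.

```python
import math


def lat_weighted_rmse(forecast, truth, lats_deg):
    """Latitude-weighted RMSE for one 2D field.

    forecast, truth: nested lists indexed as [lat][lon].
    lats_deg: latitude (in degrees) of each row.
    Hypothetical helper for illustration only.
    """
    # cos(latitude) weights, normalized so that they average to 1
    weights = [math.cos(math.radians(lat)) for lat in lats_deg]
    mean_w = sum(weights) / len(weights)
    weights = [w / mean_w for w in weights]

    total = 0.0
    count = 0
    for w, f_row, t_row in zip(weights, forecast, truth):
        for f, t in zip(f_row, t_row):
            total += w * (f - t) ** 2
            count += 1
    return math.sqrt(total / count)


# A 5.625° grid has 32 latitude rows and 64 longitude columns.
lats = [-87.1875 + 5.625 * i for i in range(32)]
truth = [[0.0] * 64 for _ in lats]
forecast = [[10.0] * 64 for _ in lats]  # constant error of 10 everywhere
score = lat_weighted_rmse(forecast, truth, lats)
```

Because the weights average to one, a constant error of 10 everywhere gives a score of 10 (up to floating-point rounding), whereas an error confined to the polar rows contributes far less than the same error at the equator.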