We are happy to announce two upcoming spotlight presentations and one poster at ICLR 2020:

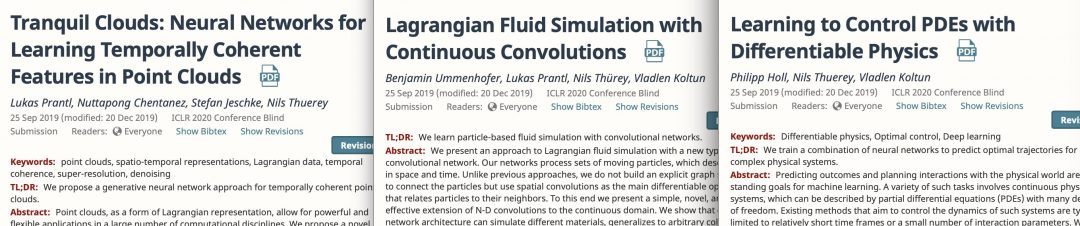

– Tranquil Clouds: Neural Networks for Learning Temporally Coherent Features in Point Clouds

– Lagrangian Fluid Simulation with Continuous Convolutions, and

– Learning to Control PDEs with Differentiable Physics.

Not surprisingly, all three focus on physics-based deep learning techniques and Navier-Stokes problems. The first two employ Lagrangian methods, while the third focuses on differentiable simulations, with particular emphasis on long-term temporal stability. The abstracts follow below.

Tranquil Clouds: Neural Networks for Learning Temporally Coherent Features in Point Clouds: Point clouds, as a form of Lagrangian representation, allow for powerful and flexible applications in a large number of computational disciplines. We propose a novel deep-learning method to learn stable and temporally coherent feature spaces for point clouds that change over time. We identify a set of problems inherent to such approaches: without knowledge of the time dimension, the inferred solutions can exhibit strong flickering, and easy solutions to suppress this flickering can result in undesirable local minima that manifest themselves as halo structures. We propose a novel temporal loss function that takes into account higher time derivatives of the point positions and encourages mingling to prevent the aforementioned halos. We combine these techniques in a super-resolution method with a truncation approach to flexibly adapt the size of the generated positions. We demonstrate the flexibility of our approach on large, deforming point sets from different sources.
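To give a rough feel for what a loss on higher time derivatives of point positions can look like, here is a minimal Python sketch. It is not the paper's loss: it assumes three consecutive frames in which point i corresponds to point i in every frame, compares discrete velocities (first differences) and accelerations (second differences) of a prediction against a reference, and leaves out the mingling term and the super-resolution network entirely. The function names and weights `w_vel`, `w_acc` are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def _sub(a: Point, b: Point) -> Point:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _second_diff(prev: Point, curr: Point, nxt: Point) -> Point:
    # Central second difference x_{t+1} - 2 x_t + x_{t-1}, a discrete acceleration.
    return tuple(nxt[k] - 2.0 * curr[k] + prev[k] for k in range(3))


def _sq_norm(a: Point) -> float:
    return a[0] ** 2 + a[1] ** 2 + a[2] ** 2


def temporal_loss(pred: List[List[Point]], ref: List[List[Point]],
                  w_vel: float = 1.0, w_acc: float = 1.0) -> float:
    """Compare first and second time derivatives of predicted vs. reference points.

    pred and ref each hold three frames [x_{t-1}, x_t, x_{t+1}]. Point i is
    ASSUMED to correspond to point i in every frame (hypothetical setup).
    """
    p0, p1, p2 = pred
    r0, r1, r2 = ref
    n = len(p1)
    vel_err = 0.0
    acc_err = 0.0
    for i in range(n):
        # First difference: discrete velocity.
        v_pred = _sub(p1[i], p0[i])
        v_ref = _sub(r1[i], r0[i])
        vel_err += _sq_norm(_sub(v_pred, v_ref))
        # Second difference: discrete acceleration, which exposes flickering.
        a_pred = _second_diff(p0[i], p1[i], p2[i])
        a_ref = _second_diff(r0[i], r1[i], r2[i])
        acc_err += _sq_norm(_sub(a_pred, a_ref))
    return (w_vel * vel_err + w_acc * acc_err) / n
```

For a single stationary reference point and a prediction that jumps by one unit and back, the velocity term contributes 1 and the acceleration term 4, so the loss is 5.0. A prediction that is merely offset by a constant has identical derivatives and scores 0, which is why a loss of this kind targets flickering rather than absolute position errors.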

Lagrangian Fluid Simulation with Continuous Convolutions: We present an approach to Lagrangian fluid simulation with a new type of convolutional network. Our networks process sets of moving particles, which describe fluids in space and time. Unlike previous approaches, we do not build an explicit graph structure to connect the particles but use spatial convolutions as the main differentiable operation that relates particles to their neighbors. To this end, we present a simple, novel, and effective extension of N-D convolutions to the continuous domain. We show that our network architecture can simulate different materials, generalizes to arbitrary collision geometries, and can be used for inverse problems. In addition, we demonstrate that our continuous convolutions outperform prior formulations in terms of accuracy and speed.
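The central idea of a continuous convolution is that the filter is evaluated at the continuous offset between two particles instead of being looked up on a fixed grid. Below is a minimal 1D sketch in Python under simplifying assumptions: scalar features, a filter stored as a few evenly spaced taps on [-R, R] and evaluated by linear interpolation, a smooth window (1 - r²/R²)³ that fades neighbor contributions to zero at the radius, and a brute-force neighbor search. All names are hypothetical, and the paper's version operates on 3D particle sets with learned filters and differs in its details; this only illustrates the principle.

```python
from typing import List, Sequence


def interp_filter(weights: Sequence[float], offset: float, radius: float) -> float:
    """Linearly interpolate a discrete filter whose taps evenly span [-radius, radius]."""
    k = len(weights)  # needs at least 2 taps
    # Map an offset in (-radius, radius) to a fractional tap index in (0, k-1).
    s = (offset + radius) / (2.0 * radius) * (k - 1)
    i0 = min(int(s), k - 2)
    t = s - i0
    return (1.0 - t) * weights[i0] + t * weights[i0 + 1]


def window(offset: float, radius: float) -> float:
    """Smooth falloff that reaches zero at the search radius (assumed form)."""
    q = (offset / radius) ** 2
    return (1.0 - q) ** 3 if q < 1.0 else 0.0


def continuous_conv(query_pos: Sequence[float], particle_pos: Sequence[float],
                    features: Sequence[float], weights: Sequence[float],
                    radius: float) -> List[float]:
    """For each query position, sum window * filter(offset) * feature over neighbors."""
    out = []
    for x in query_pos:
        acc = 0.0
        for xj, fj in zip(particle_pos, features):
            d = xj - x
            if abs(d) < radius:  # brute-force radius search, O(N * M)
                acc += window(d, radius) * interp_filter(weights, d, radius) * fj
        out.append(acc)
    return out
```

Because the filter is a function of the offset `d`, particles can sit anywhere in space and the output still varies smoothly as they move, which is what makes the operation usable on unstructured, moving particle sets.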

Learning to Control PDEs with Differentiable Physics: Predicting outcomes and planning interactions with the physical world are long-standing goals for machine learning. A variety of such tasks involve continuous physical systems, which can be described by partial differential equations (PDEs) with many degrees of freedom. Existing methods that aim to control the dynamics of such systems are typically limited to relatively short time frames or a small number of interaction parameters. We show that by using a differentiable PDE solver in conjunction with a novel predictor-corrector scheme, we can train neural networks to understand and control complex nonlinear physical systems over long time frames. We demonstrate that our method successfully develops an understanding of complex physical systems and learns to control them for tasks involving multiple PDEs, including the incompressible Navier-Stokes equations.
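To illustrate what a differentiable PDE solver provides, here is a toy Python sketch: an explicit 1D diffusion solver with additive control forces, whose gradient with respect to every force is obtained by an adjoint (reverse-mode) pass backward through the time steps. Gradient descent on the forces then steers the final state toward a target profile. This is deliberately much simpler than the paper: the forces are optimized directly rather than predicted by a neural network, there is no predictor-corrector scheme, and the grid, diffusion coefficient `alpha`, learning rate `lr`, and all function names are illustrative assumptions.

```python
from typing import List, Sequence, Tuple


def diffuse(u: Sequence[float], alpha: float) -> List[float]:
    """One explicit diffusion step (matrix A) with zero-valued ghost cells at both ends."""
    n = len(u)
    out = []
    for i in range(n):
        left = u[i - 1] if i > 0 else 0.0
        right = u[i + 1] if i < n - 1 else 0.0
        out.append(u[i] + alpha * (left - 2.0 * u[i] + right))
    return out


def simulate(u0: Sequence[float], forces: Sequence[Sequence[float]],
             alpha: float) -> List[List[float]]:
    """Return all states u_0..u_T, where u_{t+1} = A u_t + f_t."""
    states = [list(u0)]
    for f in forces:
        stepped = diffuse(states[-1], alpha)
        states.append([s + fi for s, fi in zip(stepped, f)])
    return states


def loss_and_grad(u0: Sequence[float], forces: Sequence[Sequence[float]],
                  target: Sequence[float], alpha: float) -> Tuple[float, List[List[float]]]:
    """Squared error of the final state and its gradient w.r.t. every force f_t."""
    u_final = simulate(u0, forces, alpha)[-1]
    loss = sum((a - b) ** 2 for a, b in zip(u_final, target))
    lam = [2.0 * (a - b) for a, b in zip(u_final, target)]  # dL/du_T
    grads: List[List[float]] = [[] for _ in forces]
    for t in reversed(range(len(forces))):
        grads[t] = list(lam)       # dL/df_t = dL/du_{t+1}
        lam = diffuse(lam, alpha)  # dL/du_t = A^T dL/du_{t+1}; A is symmetric here
    return loss, grads


def optimize_forces(u0: Sequence[float], target: Sequence[float], steps: int,
                    alpha: float = 0.1, lr: float = 0.1,
                    iters: int = 200) -> List[List[float]]:
    """Plain gradient descent on the control forces, starting from zero."""
    n = len(u0)
    forces = [[0.0] * n for _ in range(steps)]
    for _ in range(iters):
        _, grads = loss_and_grad(u0, forces, target, alpha)
        forces = [[f - lr * g for f, g in zip(fs, gs)]
                  for fs, gs in zip(forces, grads)]
    return forces
```

Because each step is linear, u_{t+1} = A u_t + f_t with a symmetric A, the backward pass simply reapplies `diffuse` to the adjoint vector. A nonlinear solver, such as one for the Navier-Stokes equations, would instead need the transposed Jacobian of each step, which is what automatic differentiation frameworks compute.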