Our paper on improved and controllable latent-space physics predictions will be presented at SCA 2020. It builds on our previous work from EG’19 (Latent-space Physics: Towards Learning the Temporal Evolution of Fluid Flow, Deep Fluids: A Generative Network for Parameterized Fluid Simulations) to improve the temporal predictions of the LSTM network. In addition, it controls the latent-space content to allow for modifications and improved long-term stability.

The pre-print and repository can be found here.

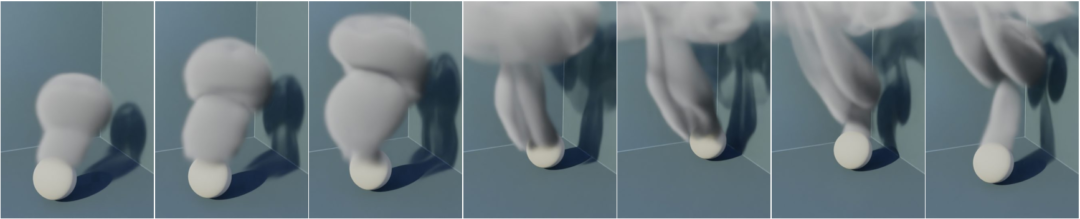

Full abstract: We propose an end-to-end trained neural network architecture to robustly predict the complex dynamics of fluid flows with high temporal stability. We focus on single-phase smoke simulations in 2D and 3D based on the incompressible Navier-Stokes (NS) equations, which are relevant for a wide range of practical problems. To achieve stable predictions for long-term flow sequences, a convolutional neural network (CNN) is trained for spatial compression in combination with a temporal prediction network that consists of stacked Long Short-Term Memory (LSTM) layers. Our core contribution is a novel latent space subdivision (LSS) that separates the respective input quantities into individual parts of the encoded latent space domain. This allows the encoded quantities to be altered distinctly without interfering with the remaining latent space values, and hence maximizes external control. By selectively overwriting parts of the predicted latent space points, our proposed method is capable of robustly predicting long-term sequences of complex physics problems. In addition, we highlight the benefits of recurrent training on the latent space creation, which is performed by the spatial compression network.
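To make the idea of selectively overwriting parts of a predicted latent point more concrete, here is a minimal sketch in plain Python. It is not the code from the paper or the repository: the latent size, the position of the controlled part, and the names `overwrite_part`, `rollout` and `toy_predictor` are all assumptions chosen for illustration, and the toy predictor merely stands in for the trained LSTM.

```python
from typing import Callable, List, Sequence

LATENT_DIM = 8                # assumed latent size, for illustration only
CONTROL_PART = slice(0, 2)    # assumed sub-range reserved for one input quantity


def overwrite_part(latent: Sequence[float],
                   known_part: Sequence[float],
                   part: slice = CONTROL_PART) -> List[float]:
    """Return a copy of `latent` whose entries in `part` are replaced."""
    out = list(latent)
    expected = len(range(*part.indices(len(out))))
    if len(known_part) != expected:
        raise ValueError("known_part does not match the size of the subdivision")
    out[part] = list(known_part)
    return out


def rollout(predict_next: Callable[[List[float]], List[float]],
            z0: Sequence[float],
            controls: Sequence[Sequence[float]]) -> List[List[float]]:
    """Autoregressive rollout: predict a latent point, overwrite the
    controlled part with externally given values, then feed it back."""
    z = list(z0)
    trajectory = []
    for control in controls:
        z = overwrite_part(predict_next(z), control)
        trajectory.append(z)
    return trajectory


def toy_predictor(z: List[float]) -> List[float]:
    """Hypothetical stand-in for the temporal prediction network."""
    return [0.9 * v + 0.1 for v in z]


if __name__ == "__main__":
    steps = rollout(toy_predictor, [0.0] * LATENT_DIM, [[1.0, 2.0], [3.0, 4.0]])
    for step in steps:
        print(step)
```

Because the controlled quantity occupies its own part of the latent vector, replacing it leaves the remaining entries exactly as the predictor produced them. In this sketch, that is how external control enters the rollout without disturbing the rest of the encoded state.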