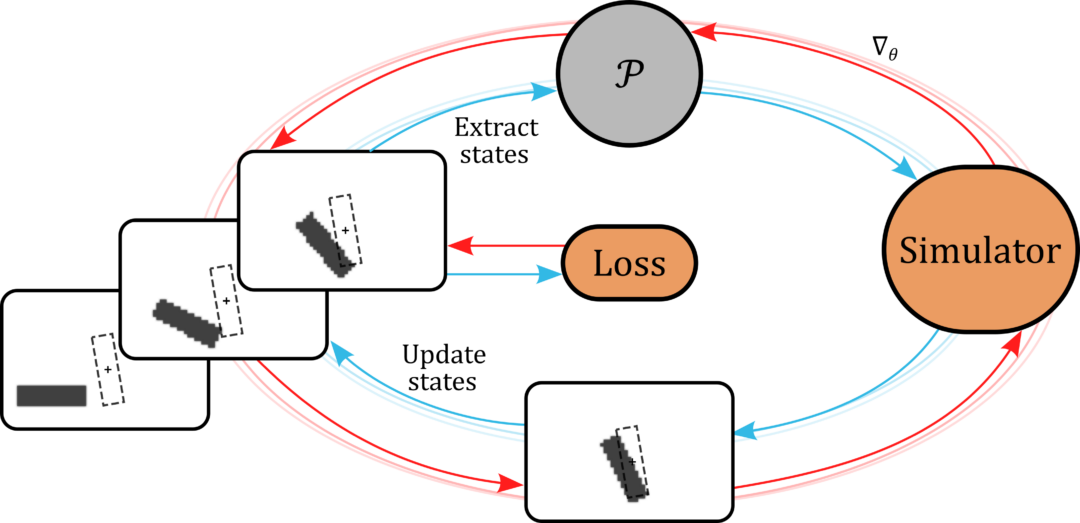

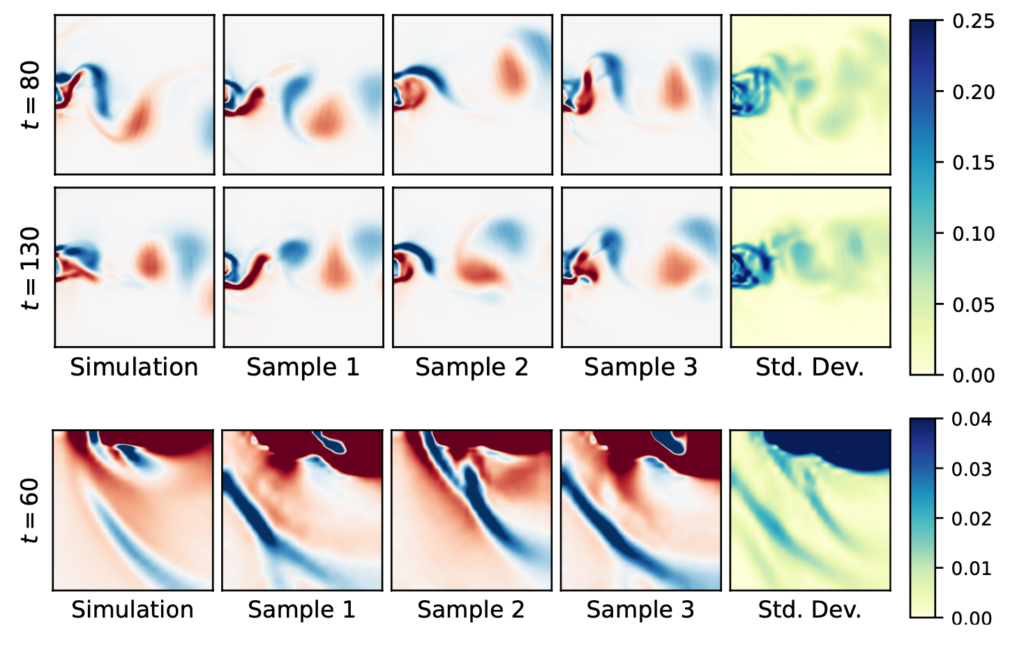

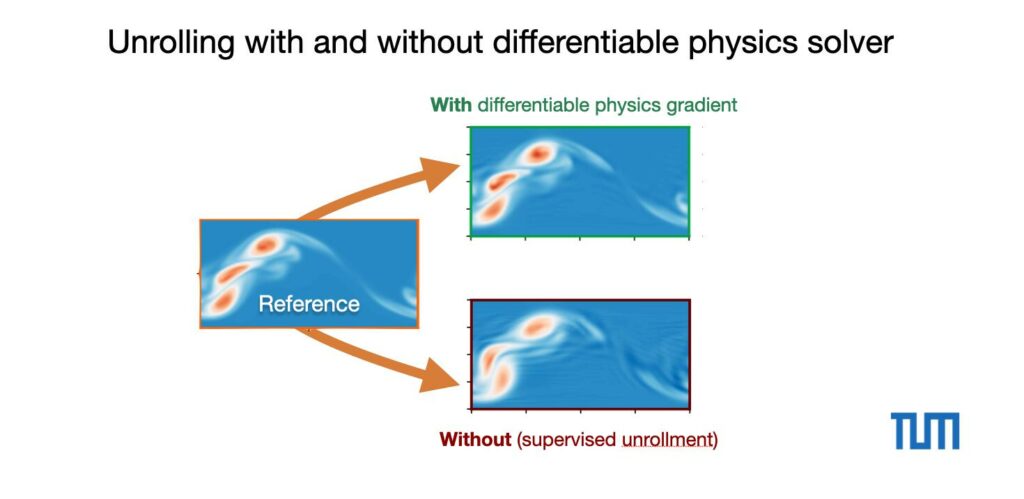

We’re also happy to announce the preprint of our paper “Physics-Preserving AI-Accelerated Simulations of Plasma Turbulence”: https://arxiv.org/abs/2309.16400 . It’s great to see that training with a differentiable physics solver also yields accurate drift-wave turbulence!

The corresponding source code of Robin Greif’s implementation is also online at https://github.com/the-rccg/hw2d . It contains a fully differentiable Hasegawa-Wakatani solver implemented with PhiFlow (and a lot of tools for evaluation on top!).

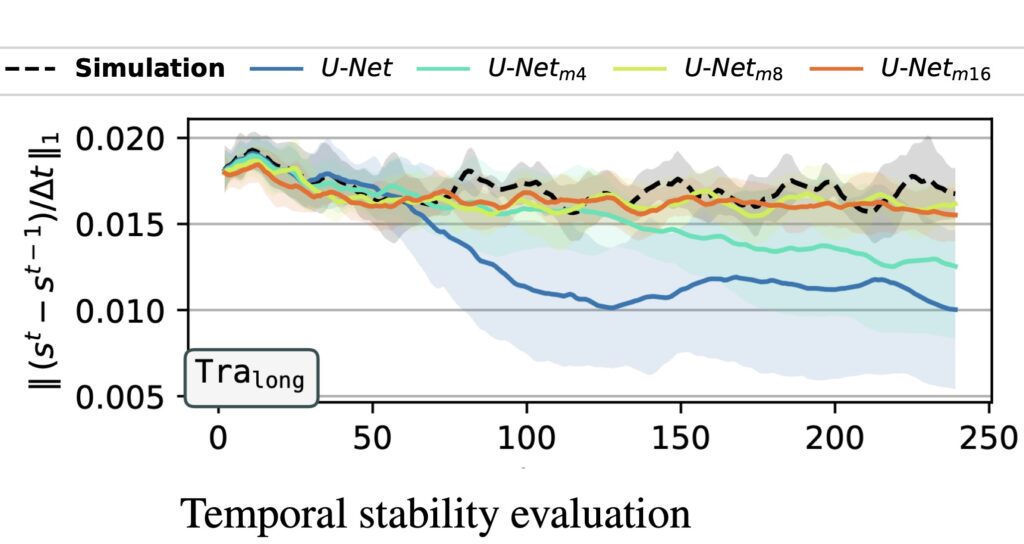

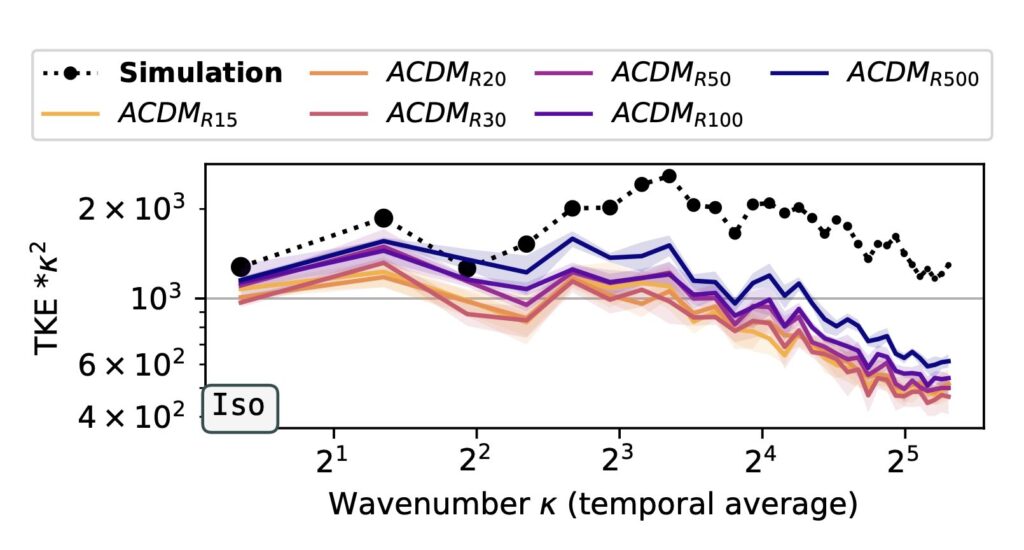

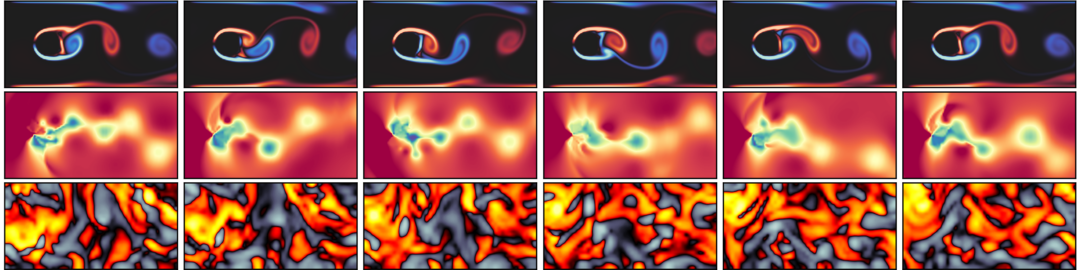

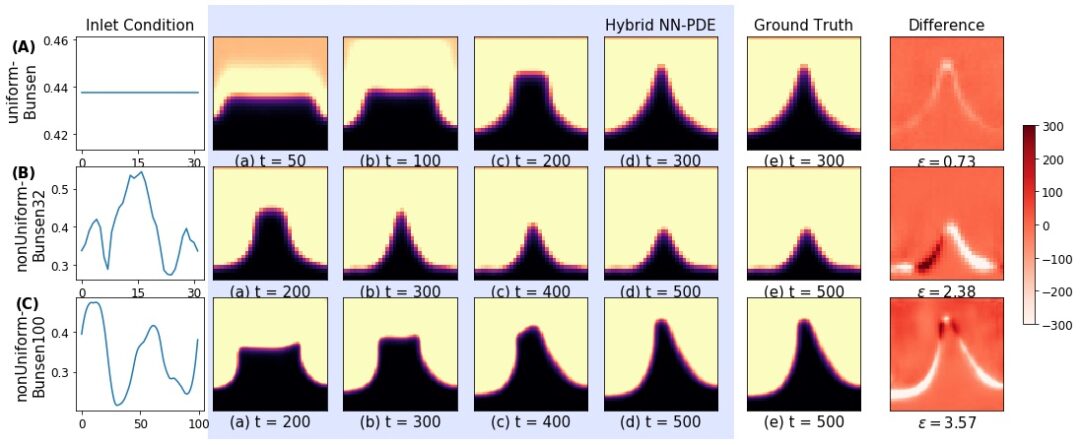

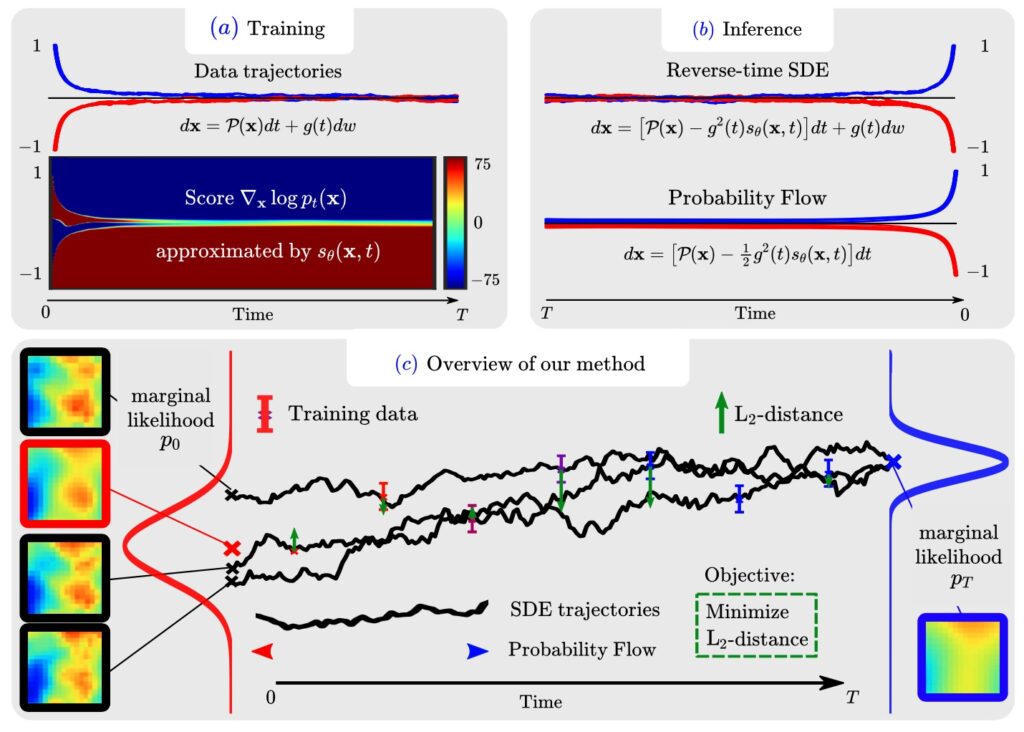

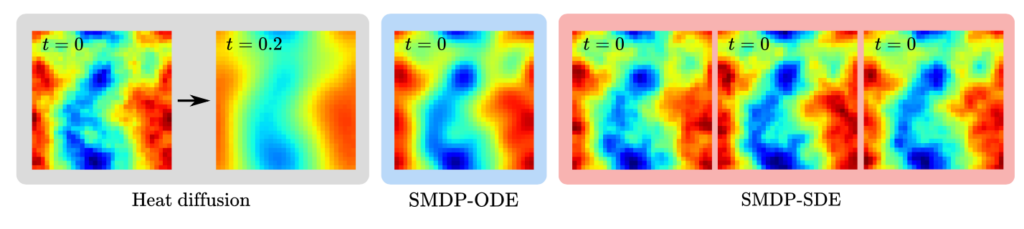

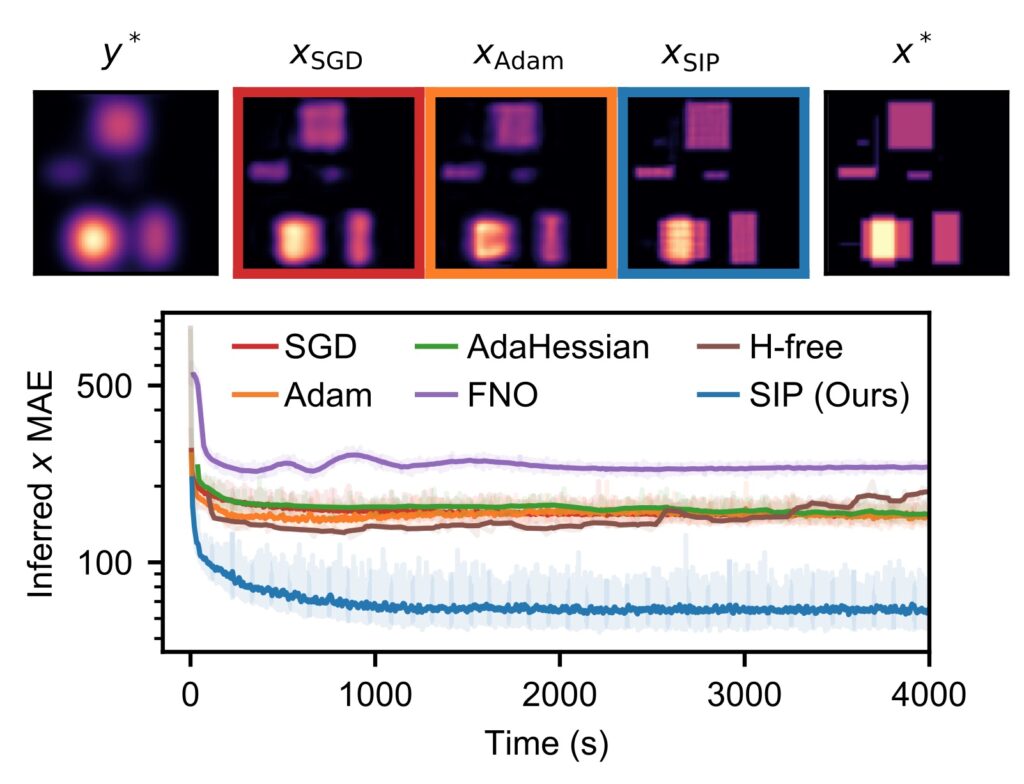

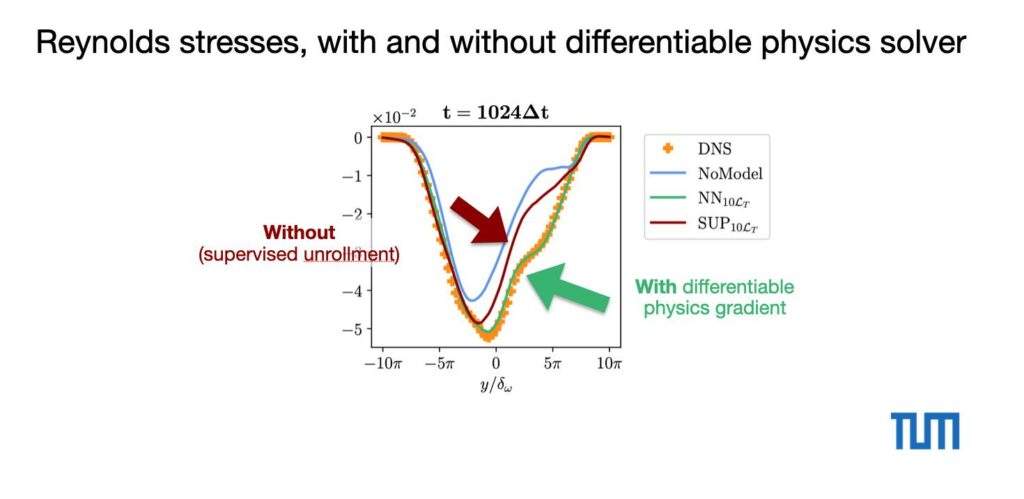

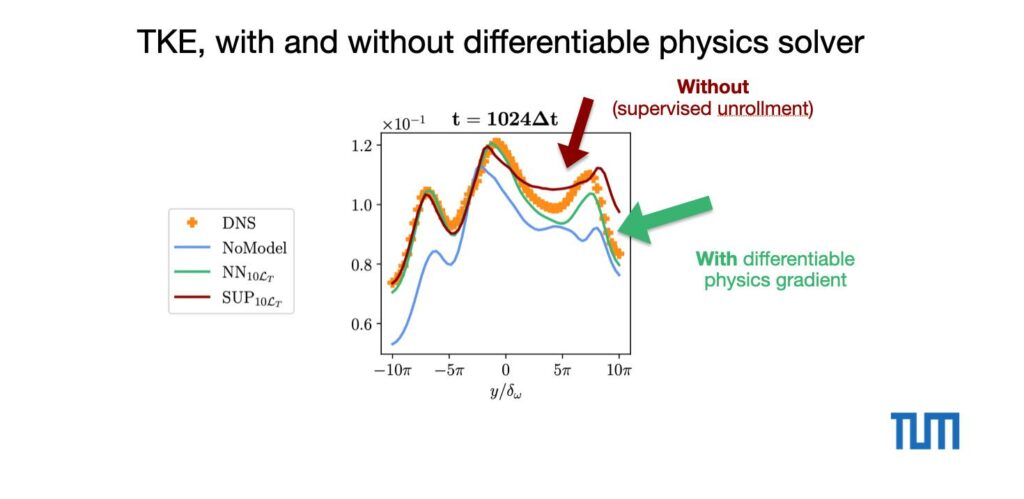

Full paper abstract: Turbulence in fluids, gases, and plasmas remains an open problem of both practical and fundamental importance. Its irreducible complexity usually cannot be tackled computationally in a brute-force style. Here, we combine Large Eddy Simulation (LES) techniques with Machine Learning (ML) to retain only the largest dynamics explicitly, while small-scale dynamics are described by an ML-based sub-grid-scale model. Applying this novel approach to self-driven plasma turbulence allows us to remove large parts of the inertial range, reducing the computational effort by about three orders of magnitude, while retaining the statistical physical properties of the turbulent system.