We’re happy to report that our ‘Neural Global Transport’ paper, titled “Learning to Estimate Single-View Volumetric Flow Motions without 3D Supervision”, has been accepted to ICLR’23. We train a neural network to replace a difficult inverse solver (computing the 3D motion of a transparent volume from a single view) without any 3D ground-truth data. With the help of a differentiable flow solver, this works surprisingly well.

You can find the full paper on arXiv: http://arxiv.org/abs/2302.14470

The source code will be available here soon: https://github.com/tum-pbs/Neural-Global-Transport

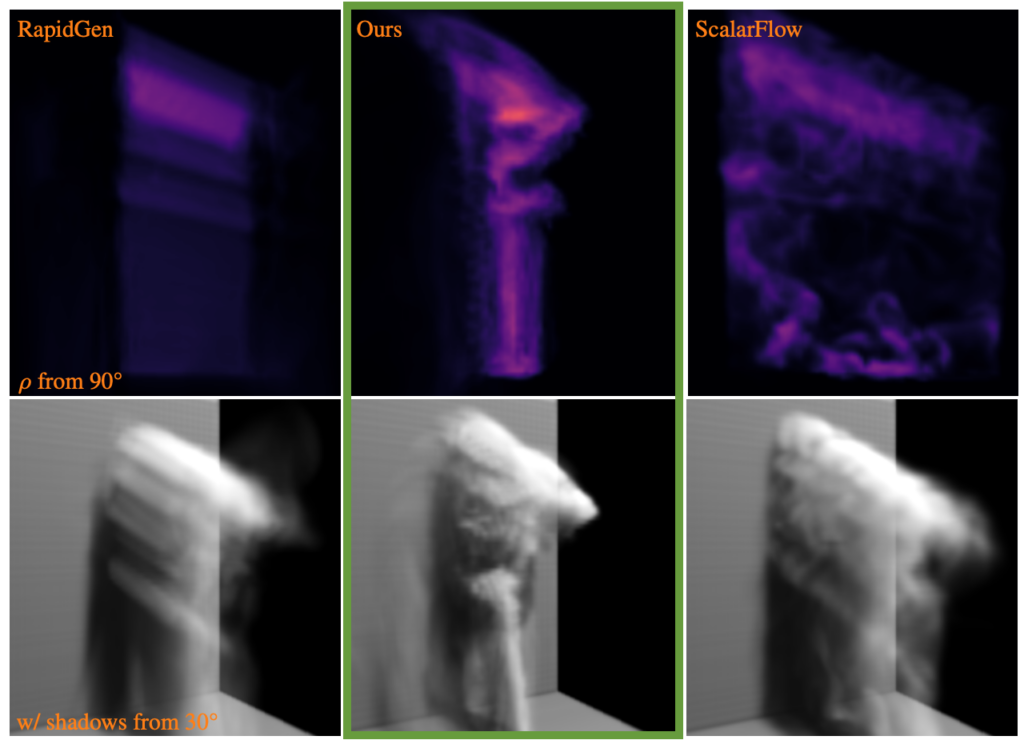

Full abstract: We address the challenging problem of jointly inferring the 3D flow and volumetric densities moving in a fluid from a monocular input video with a deep neural network. Despite the complexity of this task, we show that it is possible to train the corresponding networks without requiring any 3D ground truth for training. In the absence of ground truth data we can train our model with observations from real-world capture setups instead of relying on synthetic reconstructions. We make this unsupervised training approach possible by first generating an initial prototype volume which is then moved and transported over time without the need for volumetric supervision. Our approach relies purely on image-based losses, an adversarial discriminator network, and regularization. Our method can estimate long-term sequences in a stable manner, while achieving closely matching targets for inputs such as rising smoke plumes.
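To make the idea of a purely image-based loss more concrete, here is a small, dependency-free toy sketch. It is not the code from the paper: the function names (`render`, `transport`, `image_loss`, `make_volume`) are hypothetical, the "rendering" is a plain orthographic sum along the depth axis, and the "transport" is a rigid integer voxel shift with periodic boundaries rather than a differentiable flow solver. It only illustrates the principle of moving an initial volume over time and comparing its 2D renderings to the observed frames.

```python
def render(volume):
    """Orthographic single-view image: sum density along the depth axis (first index)."""
    depth, height, width = len(volume), len(volume[0]), len(volume[0][0])
    return [[sum(volume[z][y][x] for z in range(depth)) for x in range(width)]
            for y in range(height)]


def transport(volume, shift):
    """Toy transport step (assumption): shift the whole volume by an integer
    voxel offset (dz, dy, dx) with periodic boundaries. A real solver would
    advect the density with a spatially varying velocity field."""
    dz, dy, dx = shift
    depth, height, width = len(volume), len(volume[0]), len(volume[0][0])
    return [[[volume[(z - dz) % depth][(y - dy) % height][(x - dx) % width]
              for x in range(width)]
             for y in range(height)]
            for z in range(depth)]


def image_loss(volume, shifts, target_images):
    """Mean squared pixel error between the rendered frames of the
    transported volume and the observed 2D frames."""
    total, count = 0.0, 0
    for shift, target in zip(shifts, target_images):
        volume = transport(volume, shift)  # move the volume one time step
        image = render(volume)             # project it to the single view
        for row, target_row in zip(image, target):
            for pixel, target_pixel in zip(row, target_row):
                total += (pixel - target_pixel) ** 2
                count += 1
    return total / count


def make_volume(depth, height, width, blob):
    """Empty volume with a single unit-density voxel at blob = (z, y, x)."""
    volume = [[[0.0] * width for _ in range(height)] for _ in range(depth)]
    z, y, x = blob
    volume[z][y][x] = 1.0
    return volume


# Build "observed" frames from a blob that moves one voxel along y per step.
initial = make_volume(4, 8, 8, blob=(1, 2, 3))
true_shifts = [(0, 1, 0)] * 3
observed, state = [], initial
for step in true_shifts:
    state = transport(state, step)
    observed.append(render(state))

loss_true = image_loss(initial, true_shifts, observed)
loss_static = image_loss(initial, [(0, 0, 0)] * 3, observed)
loss_depth = image_loss(initial, [(1, 1, 0)] * 3, observed)
```

In this toy setup, `loss_true` is exactly zero and `loss_static` is positive, so the image loss does distinguish a moving volume from a static one. `loss_depth` is also zero: motion along the viewing direction is invisible in a single view. That ambiguity is one way to see why the abstract lists an adversarial discriminator and regularization alongside the image-based losses.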