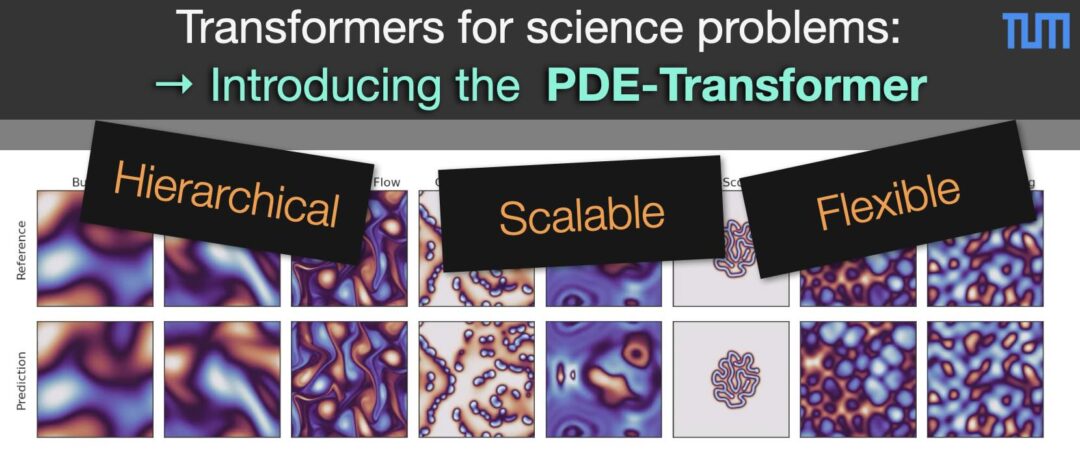

Our new NN architecture tailored to scientific tasks is online now, ready to be presented at ICML. It combines hierarchical processing (UDiT), scalability (SWin) and flexible conditioning mechanisms. Our ICML paper shows it outperforming existing SOTA architectures thanks to its custom combination of vision tricks. Code is up, everything ready to be presented in Vancouver by Benjamin soon 😁

Paper, code and tutorials are available at https://tum-pbs.github.io/pde-transformer/landing.html

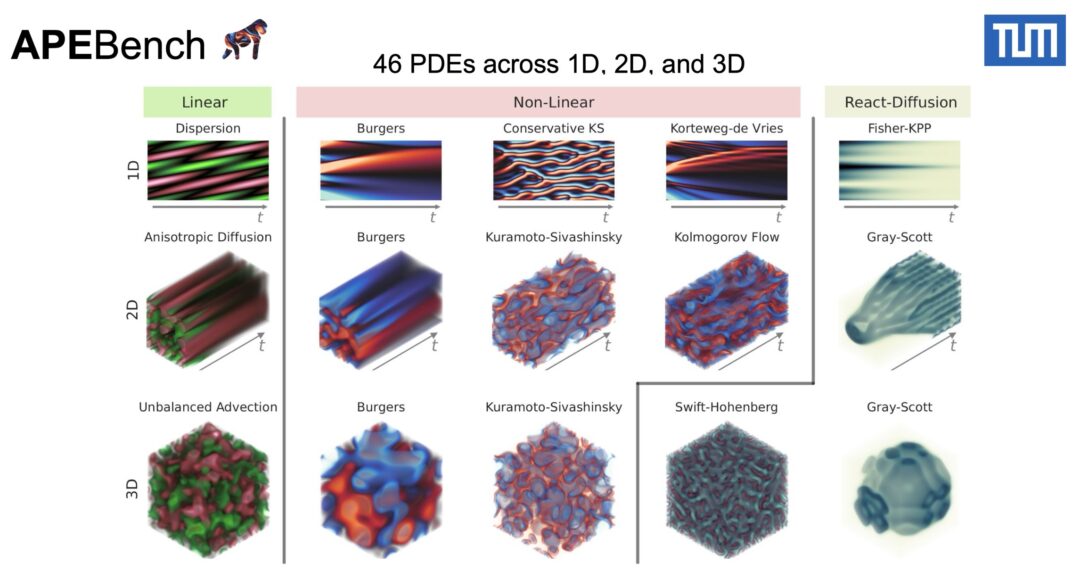

Full abstract: We introduce PDE-Transformer, an improved transformer-based architecture for surrogate modeling of physics simulations on regular grids. We combine recent architectural improvements of diffusion transformers with adjustments specific for large-scale simulations to yield a more scalable and versatile general-purpose transformer architecture, which can be used as the backbone for building large-scale foundation models in physical sciences.

We demonstrate that our proposed architecture outperforms state-of-the-art transformer architectures for computer vision on a large dataset of 16 different types of PDEs. We propose to embed different physical channels individually as spatio-temporal tokens, which interact via channel-wise self-attention. This helps to maintain a consistent information density of tokens when learning multiple types of PDEs simultaneously.

Our pre-trained models achieve improved performance on several challenging downstream tasks compared to training from scratch and also beat other foundation model architectures for physics simulations.