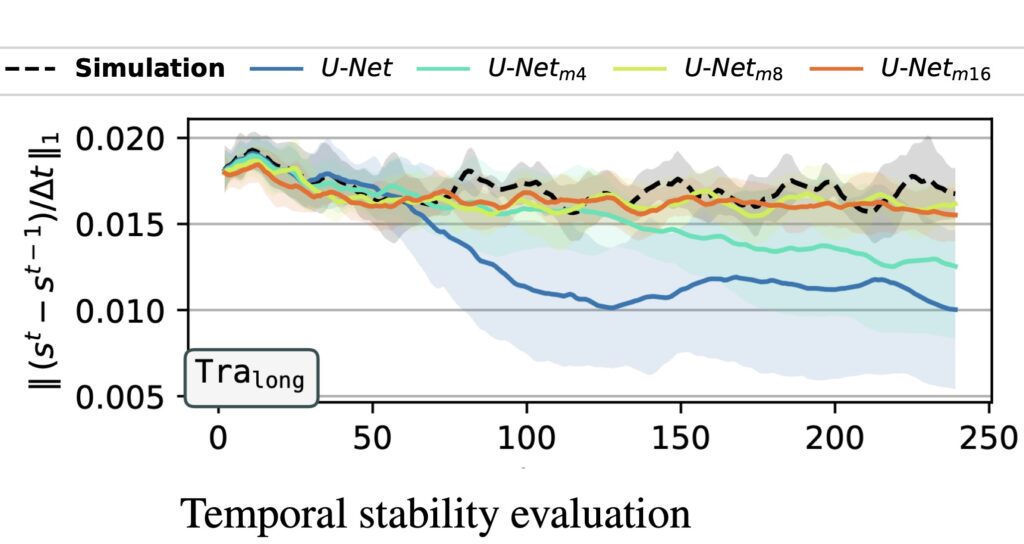

Here’s another interesting result from the diffusion-based temporal predictions with ACDM: diffusion training inherently computes its loss on single time steps, yet the resulting model is as stable as one trained with many steps of unrolling (16 are needed here):

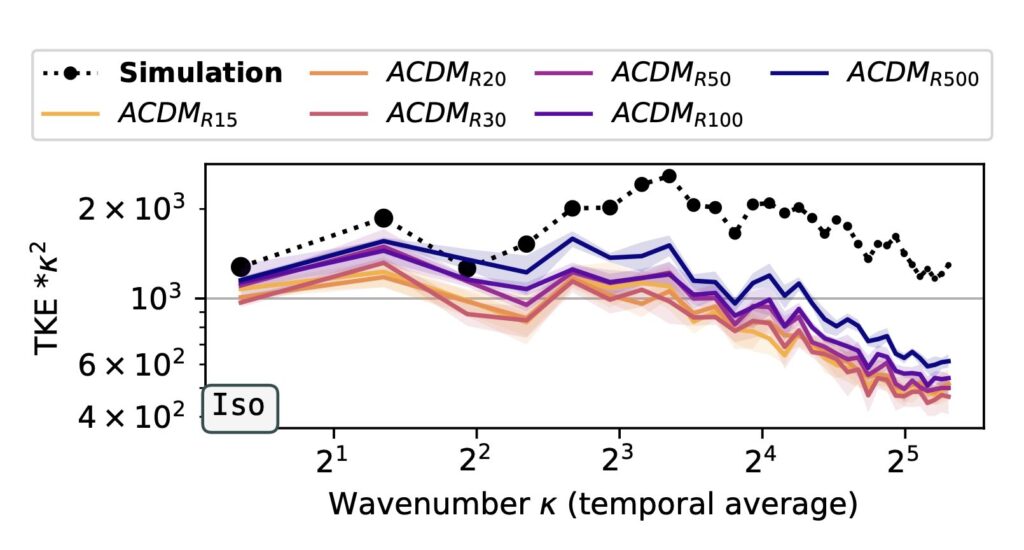
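To make the difference between the two training objectives concrete, here is a minimal NumPy sketch. It assumes a standard DDPM noise-prediction loss with a linear beta schedule, and uses a hypothetical toy linear `denoiser` in place of a network. `diffusion_loss`, `unrolled_loss` and `step_fn` are illustrative names, not ACDM's code. The sketch only evaluates losses; it does not compute gradients.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumed DDPM setup: T diffusion steps with a linear beta schedule.
T = 1000
betas = np.linspace(1e-4, 0.02, T)
alpha_bar = np.cumprod(1.0 - betas)

def denoiser(x_noisy, cond, t, w):
    """Hypothetical toy noise predictor, linear in its inputs (stands in for a network)."""
    return w[0] * x_noisy + w[1] * cond + w[2] * t / T

def diffusion_loss(x_prev, x_next, w):
    """Single-time-step loss: noise the next state at a random diffusion step,
    condition on the previous state, and regress the added noise.
    Only one pair (x_prev, x_next) of the trajectory is involved."""
    t = rng.integers(0, T)
    eps = rng.standard_normal(x_next.shape)
    x_noisy = np.sqrt(alpha_bar[t]) * x_next + np.sqrt(1.0 - alpha_bar[t]) * eps
    return np.mean((denoiser(x_noisy, x_prev, t, w) - eps) ** 2)

def unrolled_loss(trajectory, step_fn, n_unroll):
    """Unrolled loss: feed the model its own predictions for n_unroll steps
    and average the error against the reference trajectory."""
    x = trajectory[0]
    loss = 0.0
    for k in range(1, n_unroll + 1):
        x = step_fn(x)  # autoregressive: the input is the previous prediction
        loss += np.mean((x - trajectory[k]) ** 2)
    return loss / n_unroll

# Usage on a toy decaying trajectory with 17 states (enough for 16 unrolled steps).
trajectory = [rng.standard_normal(8)]
for _ in range(16):
    trajectory.append(0.9 * trajectory[-1])

w = np.zeros(3)
single_step = diffusion_loss(trajectory[3], trajectory[4], w)
unrolled = unrolled_loss(trajectory, lambda x: 0.9 * x, n_unroll=16)
```

The single-step loss needs one model evaluation per training sample. The unrolled loss needs `n_unroll` sequential evaluations, and in an autodiff framework it also needs backpropagation through all of them.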

We were also glad to find that diffusion sampling works very well with few steps in the strongly conditioned regime of temporal forecasting: instead of 1000 (or so) steps, 50 or fewer already work very well.
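The post does not say which sampler is used, so the following is only a sketch of one common way to sample with few steps. It runs a deterministic DDIM update (eta = 0) on an evenly strided subset of an assumed 1000-step linear schedule. This is a swapped-in technique and not necessarily what ACDM does. `oracle_eps` is a hypothetical, idealized denoiser for a target that the conditioning fully determines (here `0.5 * cond`). In that extreme case, the clean-sample estimate is exact at every step, so the sampler returns the target for any number of steps. It is meant as intuition only, not as a reproduction of the result above.

```python
import numpy as np

# Assumed training setup: T = 1000 diffusion steps, linear beta schedule.
T = 1000
betas = np.linspace(1e-4, 0.02, T)
alpha_bar = np.cumprod(1.0 - betas)

def ddim_sample(eps_model, cond, shape, n_steps=50, seed=0):
    """Deterministic DDIM sampling (eta = 0) that visits only n_steps
    evenly strided timesteps out of the T used in training."""
    rng = np.random.default_rng(seed)
    steps = np.linspace(T - 1, 0, n_steps).round().astype(int)  # e.g. 999, 979, ..., 0
    x = rng.standard_normal(shape)  # start from pure Gaussian noise
    for i, t in enumerate(steps):
        ab_t = alpha_bar[t]
        ab_prev = alpha_bar[steps[i + 1]] if i + 1 < n_steps else 1.0
        eps = eps_model(x, cond, t)
        # Current estimate of the clean sample, then jump to the next kept timestep.
        x0_pred = (x - np.sqrt(1.0 - ab_t) * eps) / np.sqrt(ab_t)
        x = np.sqrt(ab_prev) * x0_pred + np.sqrt(1.0 - ab_prev) * eps
    return x

def oracle_eps(x, cond, t):
    """Hypothetical idealized denoiser for a fully determined target 0.5 * cond:
    returns exactly the noise that separates x from that target at step t."""
    target = 0.5 * cond
    return (x - np.sqrt(alpha_bar[t]) * target) / np.sqrt(1.0 - alpha_bar[t])

cond = np.array([1.0, -2.0, 3.0])
sample_50 = ddim_sample(oracle_eps, cond, cond.shape, n_steps=50)
sample_5 = ddim_sample(oracle_eps, cond, cond.shape, n_steps=5)
```

With a learned denoiser the clean-sample estimate is no longer exact, so the number of steps does matter. The stride only changes how many network evaluations are spent per predicted time step.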

The corresponding project page is this one, and the source code can be found at: https://github.com/tum-pbs/autoreg-pde-diffusion.