Nils (Thuerey) will give several talks about differentiable simulations and deep learning for physics problems and PDEs in the coming weeks. Here's a preview of some of them; we hope to see some of our website visitors at these talks!

- At CMU on Feb. 10, in the AI Institute seminar of the “NSF AI Planning Institute for Data-Driven Discovery in Physics”: https://www.cmu.edu/ai-physics-institute/events/index.html

- At the University of Arizona on Feb. 11, in the “Applied Math Colloquium”: https://appliedmath.arizona.edu

- At TUM on Feb. 24, in the MDSI workshop “Computational Material Design Powered by Machine Learning”: https://www.mdsi.tum.de/mdsi/forschung/fokusthemen/cmd-ml-1/workshop-cmd-ml/

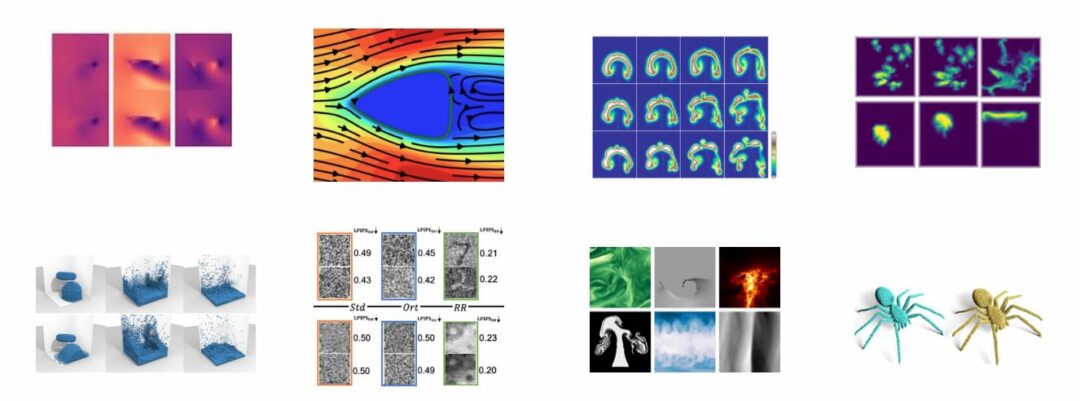

Abstract: In this talk, I will focus on the possibilities that arise from recent advances in deep learning for physical simulations. In this context, the Navier-Stokes equations are a particularly interesting advection-diffusion PDE that poses a variety of challenges for deep learning methods.

In particular, I will focus on differentiable physics solvers from the larger field of differentiable programming. Differentiable solvers are very powerful tools to integrate into deep learning pipelines. Existing numerical methods for efficient solvers can be leveraged within learning tasks to provide crucial information, in the form of reliable gradients, for updating the weights of neural networks. Interestingly, it turns out to be beneficial to combine supervised and physics-based approaches. The former poses a much simpler learning task by providing explicit reference data that is typically pre-computed. Physics-based learning, on the other hand, can provide gradients for a larger space of states that are only encountered at training time. Here, differentiable solvers are particularly useful, e.g., for giving neural networks feedback about how inferred solutions influence the long-term behavior of a physical model.

I will demonstrate this concept with several examples, ranging from learning to reduce numerical errors, to long-term planning and control, to generalization. I will conclude by discussing current limitations and giving an outlook on promising future directions.