Interested in GANs that learn physical spaces and are properly conditioned by input parameters? Our SIGGRAPH paper describes how to do exactly this: https://rachelcmy.github.io/den2vel/

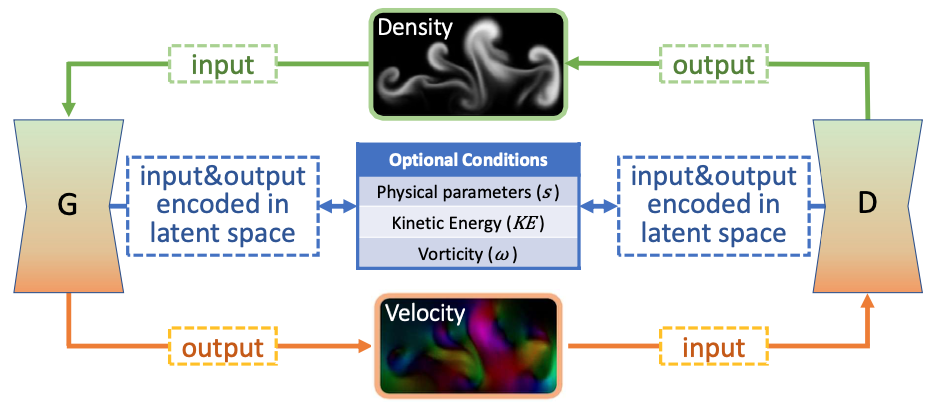

Among other components, the learning process is enabled by a discriminator that self-supervises in terms of a physical quantity, such as the marker density.
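To make "self-supervises in terms of a physical quantity" more concrete, here is a minimal sketch of one common way to do this: an auxiliary-regression discriminator loss. Besides its real/fake score, the discriminator also predicts a physical quantity, and that prediction is checked against the quantity measured on the real data itself. This is an assumption for illustration (AC-GAN style), not the exact loss from the paper. The function names, the `weight` parameter and the auxiliary head are all hypothetical.

```python
import numpy as np

def bce(p, target):
    # Binary cross-entropy on probabilities p in (0, 1); clipped for numerical safety
    eps = 1e-7
    p = np.clip(p, eps, 1 - eps)
    return float(-np.mean(target * np.log(p) + (1 - target) * np.log(1 - p)))

def discriminator_loss(real_score, fake_score, pred_quantity, true_quantity, weight=1.0):
    """Hypothetical adversarial loss plus a physical self-supervision term.

    real_score / fake_score: discriminator probabilities for real / generated samples.
    pred_quantity: the discriminator's estimate of a physical quantity
                   (assumed auxiliary output head).
    true_quantity: the same quantity measured directly on the real sample,
                   e.g. the marker density, so no extra labels are needed.
    """
    adv = bce(real_score, np.ones_like(real_score)) + bce(fake_score, np.zeros_like(fake_score))
    phys = float(np.mean((pred_quantity - true_quantity) ** 2))
    return adv + weight * phys
```

Because the regression target comes from the data itself, the discriminator has to learn features that actually encode the physical quantity rather than only surface appearance.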

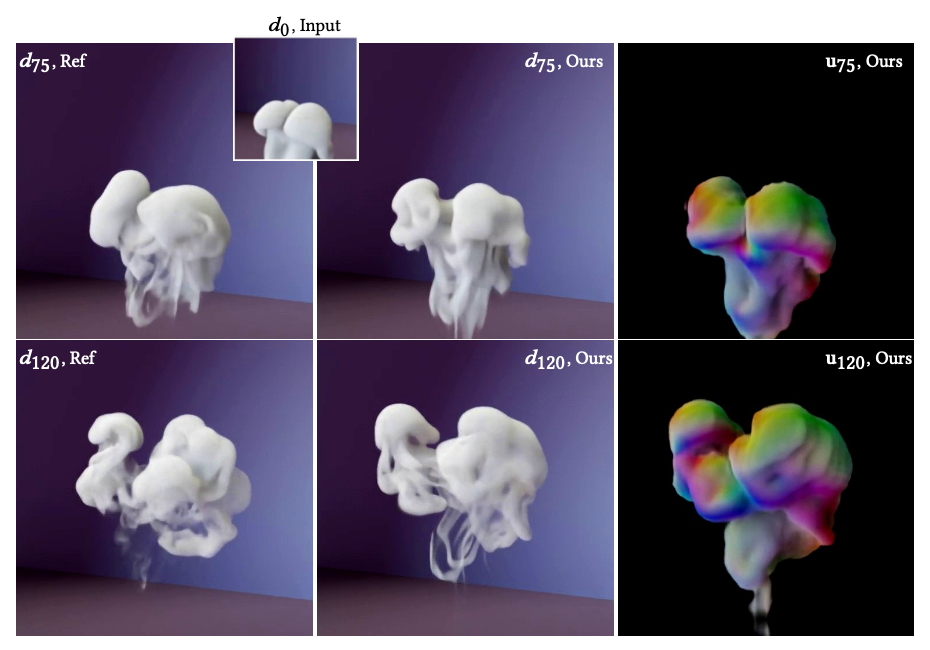

This makes it possible to infer velocities and run a simulation based purely on a marker density. We could not rewrite the Navier-Stokes (NS) equations to compute velocity from density alone (the problem is ill-posed), but the generator learns this mapping from the training data.
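The simulation loop described above can be sketched as follows, assuming a 2D grid and standard semi-Lagrangian advection. At each step, velocity is inferred from the current density only, and the density is then advected by that velocity. The `constant_updraft_generator` is a hypothetical stand-in for the trained network; it just returns a uniform upward velocity so that the loop can run without a model.

```python
import numpy as np

def advect(field, u, v, dt):
    """Semi-Lagrangian advection of a 2D scalar field on a unit-spaced grid.

    field, u, v: arrays of shape (ny, nx); u is the x-velocity, v the y-velocity.
    Each cell traces back along the velocity and bilinearly samples the old field.
    """
    ny, nx = field.shape
    y, x = np.mgrid[0:ny, 0:nx].astype(float)
    # Backtraced sample positions, clamped to the domain
    xb = np.clip(x - dt * u, 0, nx - 1)
    yb = np.clip(y - dt * v, 0, ny - 1)
    x0 = np.floor(xb).astype(int)
    y0 = np.floor(yb).astype(int)
    x1 = np.minimum(x0 + 1, nx - 1)
    y1 = np.minimum(y0 + 1, ny - 1)
    fx = xb - x0
    fy = yb - y0
    # Bilinear interpolation of the old field at the backtraced positions
    top = (1 - fx) * field[y0, x0] + fx * field[y0, x1]
    bot = (1 - fx) * field[y1, x0] + fx * field[y1, x1]
    return (1 - fy) * top + fy * bot

def constant_updraft_generator(density):
    """Hypothetical stand-in for the learned generator (density -> velocity).

    Returns a uniform velocity of 1 cell per unit time in the +y direction;
    a real model would be a neural network trained on simulation data.
    """
    return np.zeros_like(density), np.ones_like(density)

def simulate(density, generator, steps, dt=1.0):
    # The density is the only state: infer velocity, then advect, and repeat
    for _ in range(steps):
        u, v = generator(density)
        density = advect(density, u, v, dt)
    return density
```

For example, a small density blob occupying rows 3 to 5 ends up in rows 5 to 7 after `simulate(d, constant_updraft_generator, steps=2)`. With a trained generator, the inferred velocity would of course depend on the density pattern itself.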

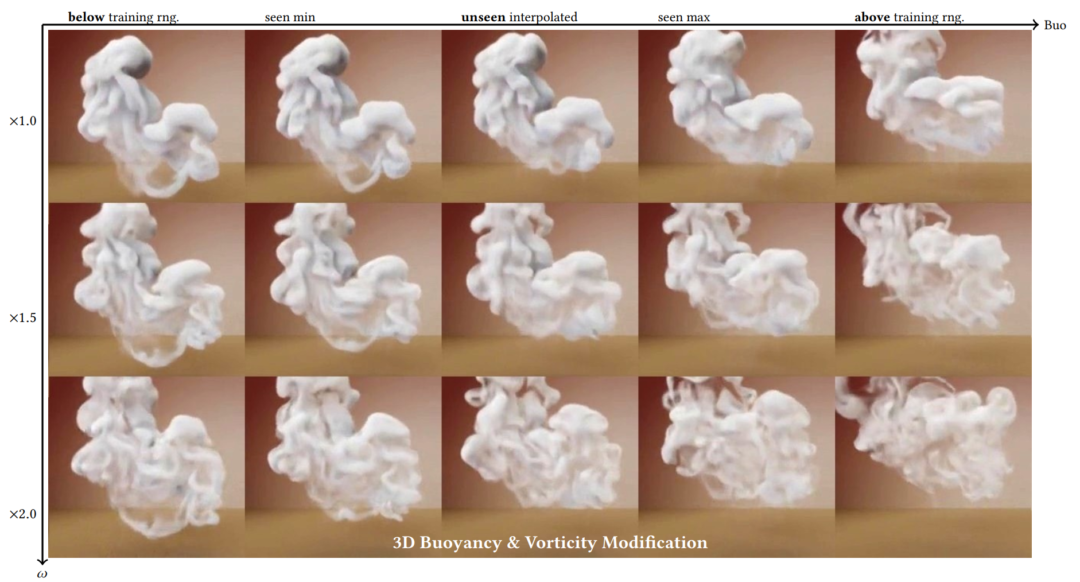

Full paper abstract: While modern fluid simulation methods achieve high-quality simulation results, it is still a big challenge to interpret and control motion from visual quantities, such as the advected marker density. These visual quantities play an important role in user interactions: Being familiar and meaningful to humans, these quantities have a strong correlation with the underlying motion. We propose a novel data-driven conditional adversarial model that solves the challenging, and theoretically ill-posed problem of deriving plausible velocity fields from a single frame of a density field. Besides density modifications, our generative model is the first to enable the control of the results using all of the following control modalities: obstacles, physical parameters, kinetic energy, and vorticity. Our method is based on a new conditional generative adversarial neural network that explicitly embeds physical quantities into the learned latent space, and a new cyclic adversarial network design for control disentanglement. We show the high quality and versatile controllability of our results for density-based inference, realistic obstacle interaction, and sensitive responses to modifications of physical parameters, kinetic energy, and vorticity.
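Two of the control modalities named in the abstract, kinetic energy and vorticity, are standard quantities that can be computed from a 2D velocity field. The sketch below shows one straightforward way to measure them on a uniform grid, using central differences via `np.gradient`. The paper's exact definitions (normalization, grid layout, boundary handling) may differ; these helpers are only illustrative.

```python
import numpy as np

def kinetic_energy(u, v):
    # Total kinetic energy per unit density on a unit-area grid: 0.5 * sum(u^2 + v^2)
    return 0.5 * float(np.sum(u ** 2 + v ** 2))

def vorticity(u, v, dx=1.0):
    # Scalar 2D vorticity w = dv/dx - du/dy; axis 0 is y (rows), axis 1 is x (columns)
    du_dy = np.gradient(u, dx, axis=0)
    dv_dx = np.gradient(v, dx, axis=1)
    return dv_dx - du_dy
```

As a sanity check, a solid-body rotation with `u = -y`, `v = x` has a constant vorticity of 2 everywhere.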