Our paper on the perceptual evaluation of arbitrary simulation data sets, or more specifically field data, is now online. We demonstrate that user studies can be employed to evaluate complex data sets, e.g., those arising from fluid flow simulations, even in situations where traditional norms such as L2 fail to yield conclusive answers. The preprint is available here: https://arxiv.org/abs/1907.04179, and this is the corresponding project page.

Abstract

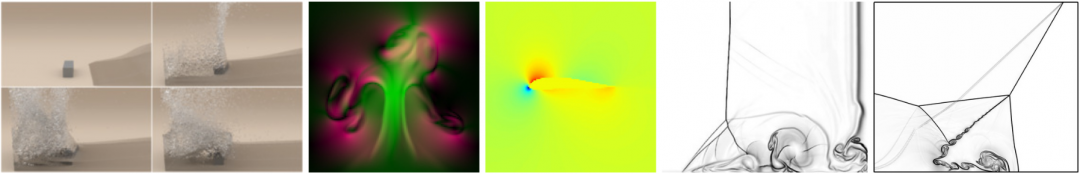

Comparative evaluation lies at the heart of science, and determining the accuracy of a computational method is crucial for evaluating its potential as well as for guiding future efforts. However, the metrics that are typically used have inherent shortcomings when faced with the under-resolved solutions of real-world simulation problems. We show how to leverage crowd-sourced user studies in order to address the fundamental problems of widely used classical evaluation metrics. We demonstrate that such user studies, which inherently rely on the human visual system, yield a very robust metric and consistent answers for complex phenomena without requiring any proficiency in the physics at hand. This holds even for cases away from convergence, where traditional metrics often yield inconclusive results. More specifically, we evaluate the results of different essentially non-oscillatory (ENO) schemes in different fluid flow settings. Our methodology represents a novel and practical approach for scientific evaluations that can give answers to previously unsolved problems.
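To make the contrast between an L2 norm and a user-study metric concrete, here is a minimal sketch under stated assumptions. It assumes a two-alternative forced-choice setup in which each participant sees a reference and two candidate results and picks the one that looks closer to the reference; the function names `l2_error` and `vote_scores`, the toy 1D fields, and the votes are all hypothetical and for illustration only. This is not the paper's exact protocol or statistical analysis. The toy example shows two candidates with identical L2 error (one damped, one with spurious oscillations), a tie that only the votes can break.

```python
import math
from collections import Counter


def l2_error(field, reference):
    """Normalized (root-mean-square) discrete L2 difference between two fields."""
    if len(field) != len(reference):
        raise ValueError("fields must have the same number of samples")
    squared = sum((a - b) ** 2 for a, b in zip(field, reference))
    return math.sqrt(squared / len(field))


def vote_scores(votes, methods):
    """Fraction of pairwise comparisons each method won.

    Assumption: `votes` is a list of (method_a, method_b, winner) tuples, where
    a participant judged `winner` to look closer to the reference.
    """
    wins = Counter()
    appearances = Counter()
    for a, b, winner in votes:
        if winner not in (a, b):
            raise ValueError(f"winner {winner!r} is not one of {a!r}, {b!r}")
        appearances[a] += 1
        appearances[b] += 1
        wins[winner] += 1
    return {m: (wins[m] / appearances[m] if appearances[m] else 0.0) for m in methods}


if __name__ == "__main__":
    # Hypothetical 1D "fields": a reference and two differently flawed candidates.
    ref = [0.0, 1.0, 0.0, -1.0]
    damped = [0.0, 0.5, 0.0, -0.5]        # candidate "A": amplitude damped
    oscillating = [0.5, 1.0, -0.5, -1.0]  # candidate "B": spurious oscillations

    # Both candidates have exactly the same L2 error, so L2 cannot rank them.
    print(l2_error(damped, ref) == l2_error(oscillating, ref))  # True

    # Made-up forced-choice votes, for illustration only.
    votes = [("A", "B", "B"), ("A", "B", "B"), ("A", "B", "A"), ("B", "A", "B")]
    print(vote_scores(votes, ["A", "B"]))  # {'A': 0.25, 'B': 0.75}
```

Counting wins per appearance is the simplest possible aggregation; a real study would also need to account for participant reliability and report confidence intervals, which this sketch deliberately omits.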