ICLR 2019; arXiv: 1704.07854

Authors

Lukas Prantl, Technical University of Munich

Boris Bonev, Technical University of Munich

Nils Thuerey, Technical University of Munich

Abstract

We propose a novel approach for deformation-aware neural networks that learn the weighting and synthesis of dense volumetric deformation fields. Our method specifically targets the space-time representation of physical surfaces from liquid simulations. Liquids exhibit highly complex, non-linear behavior under changing simulation conditions such as different initial conditions. Our algorithm captures these complex phenomena in two stages: a first neural network computes a weighting function for a set of pre-computed deformations, while a second network directly generates a deformation field for refining the surface. Key to successful training in this setting are a suitable loss function that encodes the effect of the deformations and a robust calculation of the corresponding gradients. To demonstrate the effectiveness of our approach, we showcase our method with several complex examples of flowing liquids with topology changes. Our representation makes it possible to rapidly generate the desired implicit surfaces. We have implemented a mobile application to demonstrate that real-time interactions with complex liquid effects are possible with our approach.
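The central training idea, a loss that "encodes the effect of the deformations", can be illustrated with a toy problem. The sketch below is our own simplification and not the paper's method: it uses a 1D analytic SDF instead of a space-time volume, a single hypothetical pre-computed deformation `u` (a uniform shift), an L2 loss, direct optimization of one weight `beta` instead of a weighting network, and central finite differences in place of the paper's gradient calculation. The point it shows is that `beta` is never compared to a ground-truth weight; only the deformed surface is compared to the target surface.

```python
# Toy deformation-aware loss in 1D. All names (psi0, target, u, fit_weight)
# are hypothetical; this is an illustrative sketch, not the paper's code.


def psi0(x):
    """Initial SDF: a 1D 'drop' occupying [0.2, 0.4] (negative inside)."""
    return abs(x - 0.3) - 0.1


def target(x):
    """Target SDF: the same drop moved to [0.4, 0.6]."""
    return abs(x - 0.5) - 0.1


def u(x):
    """Hypothetical pre-computed deformation: a uniform shift of 0.4."""
    return 0.4


XS = [j * 0.05 for j in range(21)]  # sample points on [0, 1]


def deformed(beta, x):
    """Backward warp of psi0 by the weighted deformation beta * u."""
    return psi0(x - beta * u(x))


def loss(beta):
    """Deformation-aware L2 loss: compares surfaces, not weights."""
    return sum((deformed(beta, x) - target(x)) ** 2 for x in XS) / len(XS)


def grad(beta, h=1e-4):
    """Central finite difference (a stand-in for an analytic gradient)."""
    return (loss(beta + h) - loss(beta - h)) / (2.0 * h)


def fit_weight(beta=0.0, lr=2.0, steps=100):
    """Plain gradient descent on the single deformation weight."""
    for _ in range(steps):
        beta -= lr * grad(beta)
    return beta


beta = fit_weight()
print(round(beta, 6))  # prints 0.5: half of the 0.4 shift moves the drop by 0.2
```

Even in this tiny setting the loss is only piecewise smooth in `beta` (the SDF has a kink at the drop center), which hints at why a robust gradient calculation matters once the deformations are dense 3D or 4D fields.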

Links

Preprint

Main Video

Supplemental Video

Android App

Further Information

Being able to interact with physical effects allows users to experience and explore their complexity directly. In interactive settings, users can ideally inspect the scene from different angles and experiment with the physical phenomena using varying modes of interaction. This hands-on experience is difficult to convey with pre-simulated and rendered sequences. While real-time simulations are possible with enough computing power at hand, many practical applications cannot rely on having a dedicated GPU at their full disposal. Data-driven methods offer an alternative to such direct simulations: they pre-compute the motion and/or the interaction space and extract a reduced representation that can be evaluated in real time. This direction has been demonstrated for a variety of effects such as detailed clothing, soft-tissue effects, swirling smoke, and forces in SPH simulations.

Liquids are an especially tough candidate in this area, as the constantly changing boundary conditions at the liquid-gas interface result in a strongly varying and complex space of surface motions and configurations. A notable exception is the StateRank approach, which enables interactive liquids by carefully pre-computing a large graph of pre-rendered video clips from which the desired liquid behavior is synthesized. The algorithm by Ladicky et al. also proposes a pre-computation scheme for liquids; however, it targets the accelerations of a Lagrangian solver in order to speed up a full dynamic simulation.

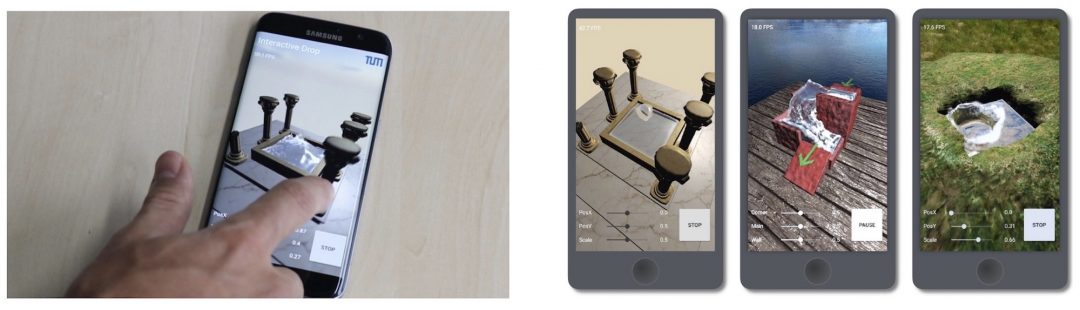

Fig. 1: Our method represents a complex space of liquid behavior, such as varying liquid drops, with a novel reduced representation based on deformations. In addition to regular space-time deformations obtained with an optical flow solve, we employ a generative neural network to capture the whole space of liquid motions. This allows us to generate new versions very efficiently. As a proof of concept, we demonstrate that this setup runs interactively on a regular mobile device (screenshots above).

In the following, we present a novel approach to realize interactive liquid simulations: we treat a (potentially very large) collection of input simulations as a smoothly deforming set of space-time signed-distance functions (SDFs). An initial implicit surface is deformed in space and time to yield the desired behavior as specified by the initial collection of simulations. To calculate and represent the deformations efficiently, we take a two-step approach. First, we span the sides of the original collection with multiple pre-computed deformations. In a second step, we refine this rough surface with a generative neural network. Given the pre-computed deformations, this network is trained to represent the details within the inner space of the initial collection.
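The two-step idea of combining weighted pre-computed deformations with a generated refinement can be illustrated on a toy problem. The sketch below is our own simplification, not the paper's implementation: it uses a 1D grid instead of a space-time volume, applies the total deformation by backward warping with linear interpolation, and simply sums the weighted pre-computed deformations rather than using the paper's alignment step. The `weights` and `refinement` values are hard-coded stand-ins for the outputs of the two networks, and all function names are hypothetical.

```python
# 1D toy sketch (assumptions listed above): a weighted sum of pre-computed
# deformations plus a refinement field, applied to a grid-sampled SDF.


def sample_linear(values, x, dx):
    """Linearly interpolate grid samples (spacing dx) at x, clamped to the grid."""
    t = x / dx
    if t <= 0.0:
        return values[0]
    if t >= len(values) - 1:
        return values[-1]
    i = int(t)
    w = t - i
    return (1.0 - w) * values[i] + w * values[i + 1]


def combine_deformations(weights, deformations, refinement):
    """u(x_j) = sum_i beta_i * u_i(x_j) + r(x_j)."""
    return [
        sum(b * d[j] for b, d in zip(weights, deformations)) + refinement[j]
        for j in range(len(refinement))
    ]


def warp(psi0, u, dx):
    """Backward warp: psi(x_j) = psi0(x_j - u(x_j)), moving the surface by +u."""
    return [sample_linear(psi0, j * dx - u[j], dx) for j in range(len(psi0))]


dx = 0.1
xs = [j * dx for j in range(11)]          # grid on [0, 1]
psi0 = [abs(x - 0.3) - 0.1 for x in xs]   # drop occupying [0.2, 0.4]
deformations = [[0.2] * 11, [0.4] * 11]   # two hypothetical uniform shifts
weights = [0.5, 0.25]                     # stand-in for the weighting network
refinement = [0.1] * 11                   # stand-in for the generated refinement
u = combine_deformations(weights, deformations, refinement)
psi = warp(psi0, u, dx)                   # drop now occupies about [0.5, 0.7]
```

Because the pre-computed fields here are uniform shifts, the combined field translates the drop by 0.5·0.2 + 0.25·0.4 + 0.1 = 0.3; with spatially varying fields the same warp deforms the surface non-rigidly. Near the left boundary the clamped lookup does not return true distances, which a real implementation would need to handle.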

For data-driven liquids, working with implicit surfaces represents a sweet spot between the raw simulation data, which for liquids often consists of a large number of particles, and a pre-rendered video. The deformed implicit surface can be rendered very efficiently from any viewpoint, and can be augmented with secondary effects such as splashes or foam floating on the surface. At the same time, working with implicit surfaces keeps our method general, in the sense that it can use implicit surfaces generated by any simulation approach.

All steps of our pipeline, from applying the pre-computed deformations to evaluating the neural network and rendering the surface, can be performed very efficiently. To demonstrate the very low computational footprint of our approach, we run our final models on a regular mobile device at interactive frame rates. Our demo application can be obtained online in the Google Play Store under the title “Neural Liquid Drop”, and several screenshots of it can be found above. In this app, generating the implicit surface for each frame requires only 30 ms on average.

The central contributions of our work are a novel deformation-aware neural network approach to efficiently represent large collections of space-time surfaces, an improved deformation alignment step for applying the pre-computed deformations, and a real-time synthesis framework for mobile devices. Together, these contributions make it possible to represent the deformation space for more than 1000 input simulations in a 30 MB mobile executable.
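As a rough sanity check on these numbers (our own arithmetic, which treats the whole 30 MB executable as an upper bound on the storage of the learned representation and ignores rendering time):

```latex
\frac{30\,\mathrm{MB}}{1000\ \text{simulations}} \approx 30\,\mathrm{KB}\ \text{per simulation (at most)},
\qquad
\frac{1000\,\mathrm{ms/s}}{30\,\mathrm{ms/frame}} \approx 33\ \text{frames/s}
```

So each input simulation costs at most about 30 KB of storage, and surface generation alone leaves headroom for roughly 33 frames per second before the rendering cost is added.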