Our paper on training recurrent neural operators with a solver in the loop has finally been officially accepted at CMAME! Congratulations Bjoern 😀 👍 In parallel, we've been updating the paper https://arxiv.org/pdf/2402.12971 and the source code https://github.com/tum-pbs/unrolling. Feel free to give it a try, and let us know how it works!

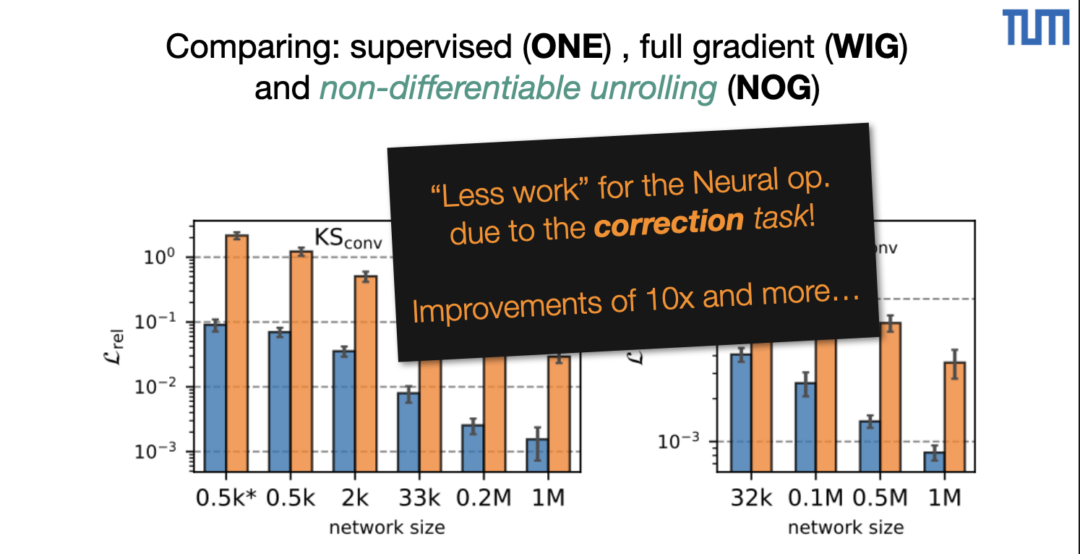

We have to thank the anonymous CMAME reviewers 🙏 They motivated us to substantially revise the submission. The theory was clarified, we included a 3D case, and with all results now redone using relative errors, the improvements turn out to be even more substantial: moving from pure prediction to correction with unrolling yields more than a 10x improvement for identical NNs.

Here’s the official CMAME link: https://authors.elsevier.com/sd/article/S0045-7825(24)00696-0